Project Details

Overview

In June 2018, Luminate commissioned Simply Secure to conduct human-centered design (HCD) research to uncover grantees’ experiences of the funding process. The research addressed three main questions:

What pain points offer opportunities for improving grantees’ experience?

What actionable recommendations are priorities for GCE to address?

What steps can be taken to build continuous HCD research into GCE’s processes?

Simply Secure built confidentiality and anonymity into the research process in order to solicit authentic, critical feedback. The report highlights feedback from 20 interviews and 53 survey responses, including anonymized quotes and comments.

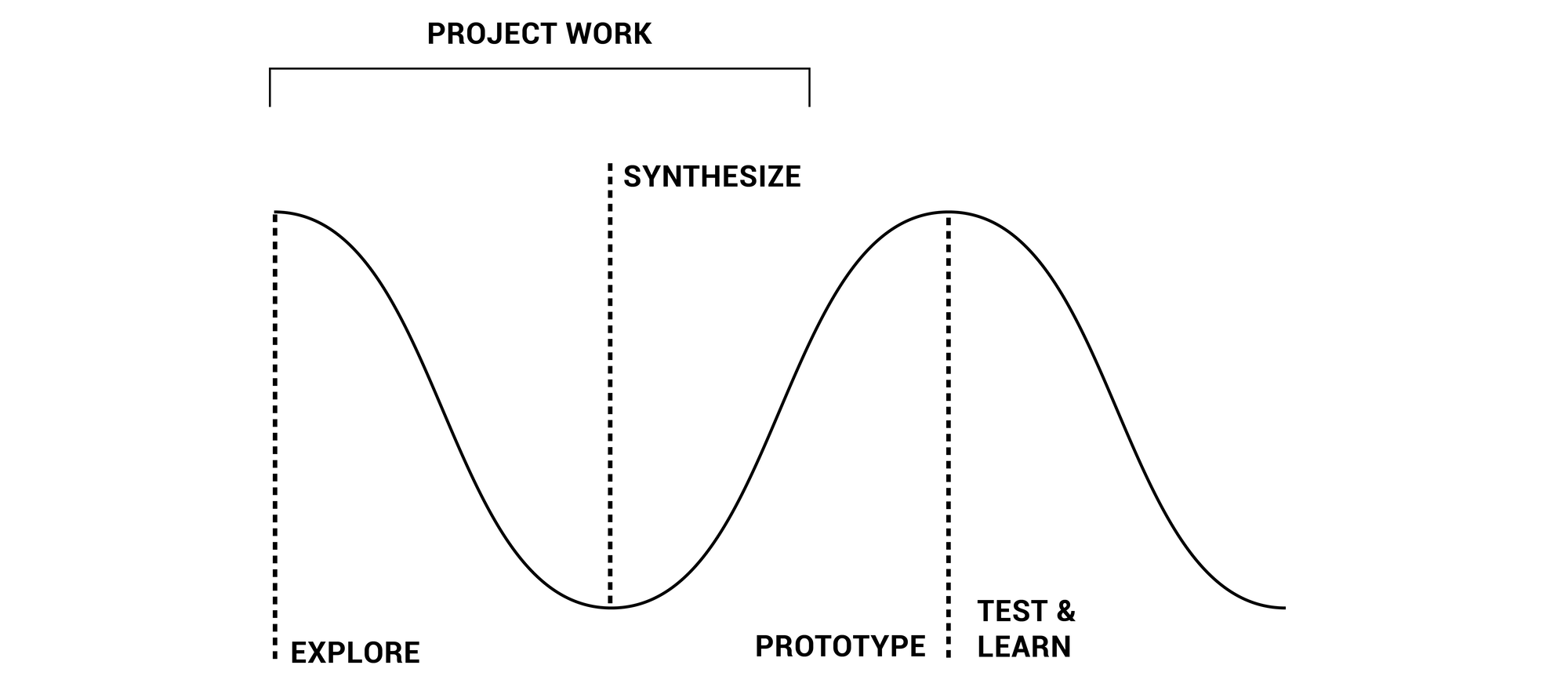

HCD methods are applicable in any part of a product or service development process, from initial needfinding through prototyping and feedback. The project was early-stage, formative work focused on surfacing the needs of grantees. This work could potentially be continued in the future to further develop the recommendations. Additional HCD methods can ensure the research participants’ needs stay central in the later design and implementation process.

The findings in this report have been informed by feedback from Luminate’s grantees and do not include responses from for-profit organizations in the portfolio. Please see the Methodology section below for more information.

This report is based on interviews and data collected from grantees between July and September 2018. It reflects grantees’ perspectives on the experience they had while funded by Omidyar Network’s GCE initiative, and the recommendations have been developed according to experiences with Omidyar Network’s processes and dynamics (rather than Luminate’s). These learnings can therefore be seen as a useful baseline for Luminate to evaluate itself against going forward.

Methodology

Simply Secure’s approach is values-based and leverages human-centered design practices throughout our work. Three values that particularly informed our approach to this research are:

1. Confidentiality

Forming a rapport strong enough to get potentially critical information about an influential funder from a tech-savvy audience required developing a robust set of confidentiality practices.

Participant data was stored only on encrypted devices.

Temporary, purpose-specific email accounts and calendar items were used, and were deleted once the research was finalized.

Aliases were used for participants in all unencrypted electronic communication (Slack, Google Apps, scheduling). The only link between the alias and the participants’ names was on an encrypted drive and in handwritten notes.

Before the interviews, each participant received a data handling statement via email.

During the interviews, we only took handwritten notes to decrease the digital footprint.

We selected and used an open-source platform called LimeSurvey because of its strong privacy-preserving policies.

The entire project (data collection, storage, and processing) is GDPR-compliant.

We believe these policies, which can be found in the Resources section on the report website, can be a model for the participants’ communities, as well as others seeking to do HCD work in sensitive situations.
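The alias practice described above can be illustrated with a minimal sketch. This is not Simply Secure's actual tooling; the function name `assign_aliases`, the `P-` prefix, and the example organization names are all hypothetical. It shows only the general idea: aliases are random, so they reveal nothing about the participant, and the name-to-alias key is kept apart from anything shared over unencrypted channels.

```python
import secrets


def assign_aliases(names, prefix="P"):
    """Map each real name to a random alias that reveals nothing about it.

    Hypothetical helper for illustration only.
    """
    aliases = {}
    used = set()
    for name in names:
        alias = f"{prefix}-{secrets.token_hex(3)}"
        # Regenerate in the unlikely event of a collision.
        while alias in used:
            alias = f"{prefix}-{secrets.token_hex(3)}"
        used.add(alias)
        aliases[name] = alias
    return aliases


# Hypothetical participant names.
key = assign_aliases(["Example Org A", "Example Org B"])

# `key` is the only link between names and aliases; in a practice like the
# one described above it would live only on encrypted storage.
# Anything written to unencrypted tools uses the alias alone.
public_schedule = [f"Interview with {alias}" for alias in key.values()]
```

Random aliases are used here rather than hashes of the names, since a hash of a known organization name could be recomputed and matched by a third party.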

2. Openness

Our practice is to share as much of our work as possible (e.g., data, insights, and techniques) while also respecting participant and collaborator privacy and security. This allows us to reflect how much we value participant input. For this project, the insights are shared as a public report. The techniques and methods used for privacy-preserving user research are shared in the Resources section on the report website.

3. Empathy

Human-centered design (HCD) is grounded in empathy. In an HCD process, we take the approach that understanding people’s experiences, needs, and values sets the foundation for improving the world they live in.

HCD research methods often take the form of semi-structured interactions, e.g., interviews, design workshops and exercises, and participatory methods. These techniques surface participants’ priorities and values by giving them control over the focus of the research. Each interaction is a unique response to each participant, tailored to their individual concerns. HCD methods aren’t entirely open-ended; a common overall structure allows researchers to draw out parallel themes and insights.

Why human-centered research?

Human-centered research is about people, and about the impact that a program, a process, or a technology has on those people’s lives. Human-centered research generates actionable insights to improve an organization’s offerings. In contrast to a top-down process which emphasizes the priorities of a funder, human-centered research as applied to philanthropy offers the opportunity to understand not just how a given grant impacts an organization, but how the relationship between funder and grantee shapes the health of the nonprofit sector as a whole.

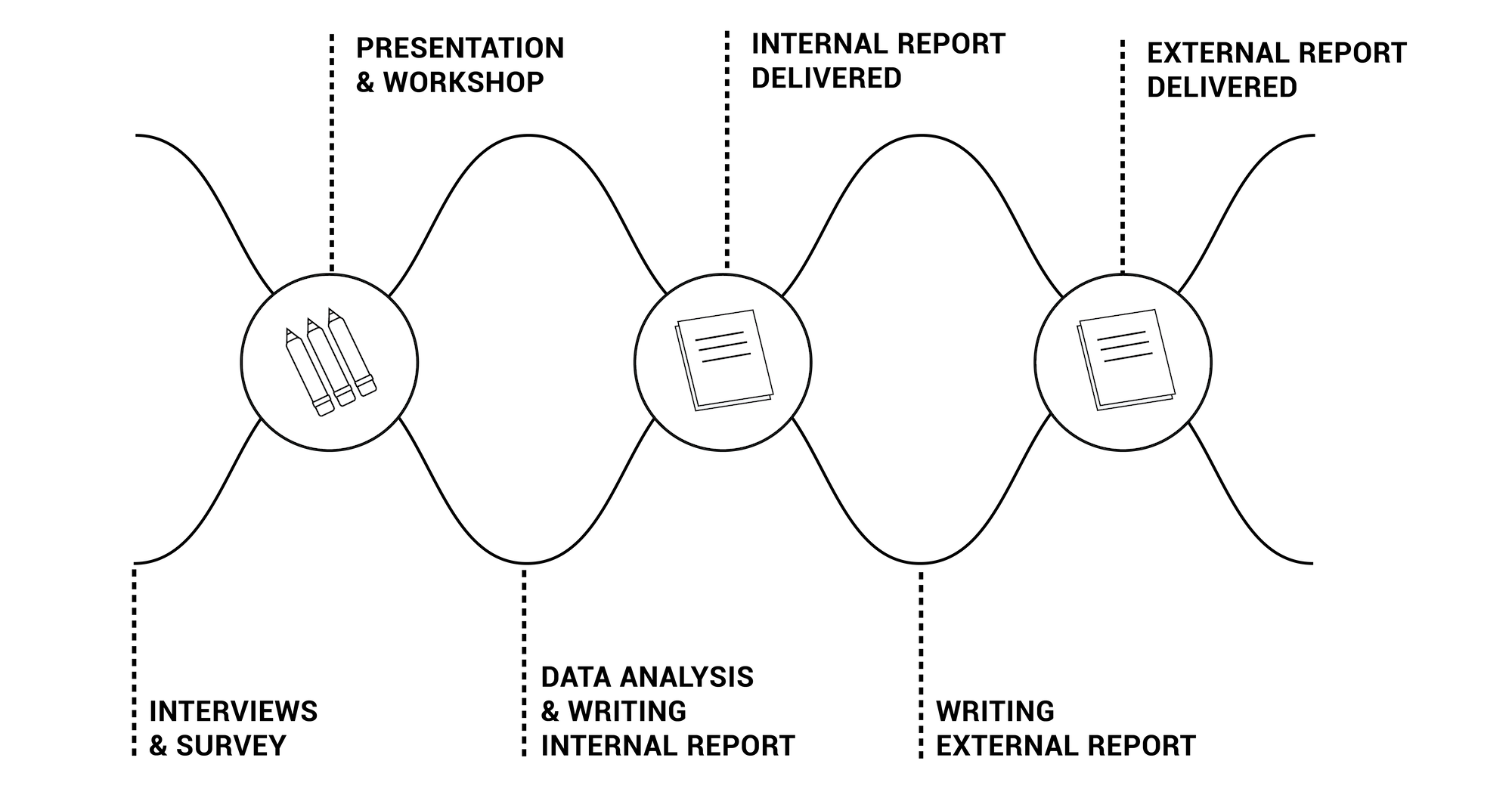

Simply Secure’s approach to human-centered research is grounded in respect for participants and dynamic adaptation to the ongoing insights that emerge from the research process. A mix of qualitative and quantitative methods was employed. The core of this work was a series of semi-structured, in-depth, participant-led interviews. The interview guide contained open-ended questions and clear topics to cover, but remained flexible enough for the participant to direct time and attention to their own priorities rather than Simply Secure’s. New questions that arose during the interviews were then used to shape a survey. The survey contained more targeted questions and was distributed to a larger group in order to understand how these emerging questions and opportunity areas resonated with a broader audience.

Overall, this human-centered approach to research allowed us to have critical conversations around important relationships with the participants and adapt as needed to the issues participants raised. A human-centered lens is ideal for this type of research as it allows for better understanding of the participants’ context and environment, as well as the specific process or practice that is the focus of the research.

Limitations

Although this project uses best practices for human-centered user research, there are some limitations to the approach.

The response rate for both the interview requests and the survey was high overall, but the response rate from for-profit organizations was low. Although the sentiments from the participants were consistent across both for-profit and non-profit organizations, the research team decided that it would be better to remove the for-profit responses from the data, so as not to inappropriately over-generalize the insights. The research team has connected with the for-profit participants to let them know.

Drawing conclusions from small-scale samples has risks. These risks can be mitigated, e.g., through a mixed-methods approach using additional methods such as surveys and data analysis to triangulate findings.

Researcher bias in interpreting and prioritizing the results is another risk. To mitigate the risks of bias, two researchers participated in every interview, and the interviews were complemented with a survey.

This phase of research did not include GCE alumni. If further work is pursued to focus on the end of the grantee-funder relationship, then alumni input would be critical to better understand and shape future guidelines.

Self-selection bias may have impacted who we heard from in the interviews and the survey. Organizations that felt their relationship with GCE was particularly strong or particularly terrible might not have chosen to participate, feeling that their feedback was not needed (in the case of a strong relationship) or wouldn’t be heard (in the case of a bad relationship). To mitigate this, organizations were invited to participate multiple times, by both Simply Secure and GCE, and the outreach communications covered concerns around confidentiality and privacy.

Notes

Luminate funds both non-profits and for-profits and refers to both collectively as investees. Because for-profits were removed from the participant population, we use the term “grantee” in this report.

Due to the confidentiality practices in place, it was not possible to connect quotes back to organizations. As a result, although for-profit organizations are not represented in the participation rates and the overview of survey participation below, quotes from for-profits may be included alongside the quotes from grantees.

Simply Secure conducted the research prior to the Luminate launch in October 2018, so the respondents shared their perceptions of the Governance & Citizen Engagement (GCE) initiative at Omidyar Network as it was at that time. In this report, we have reframed current efforts around Luminate, and otherwise referenced “GCE” or “Omidyar,” matching participants’ language about past experiences.

Participants often used the terms “GCE,” “Omidyar,” and “ON” interchangeably.

Interview Participation Overview

The interviews consisted of open-ended questions about the organization’s relationship with GCE. Interviews were 45-90 minutes long, depending on the respondent’s availability.

We conducted 20 interviews: 17 with current grantees, and three with other people of interest, e.g., sector experts or unfunded organizations. All interviews were conducted by two people from Simply Secure. Six were in person, and 14 were remote. The majority of interviews involved one participant from the organization.

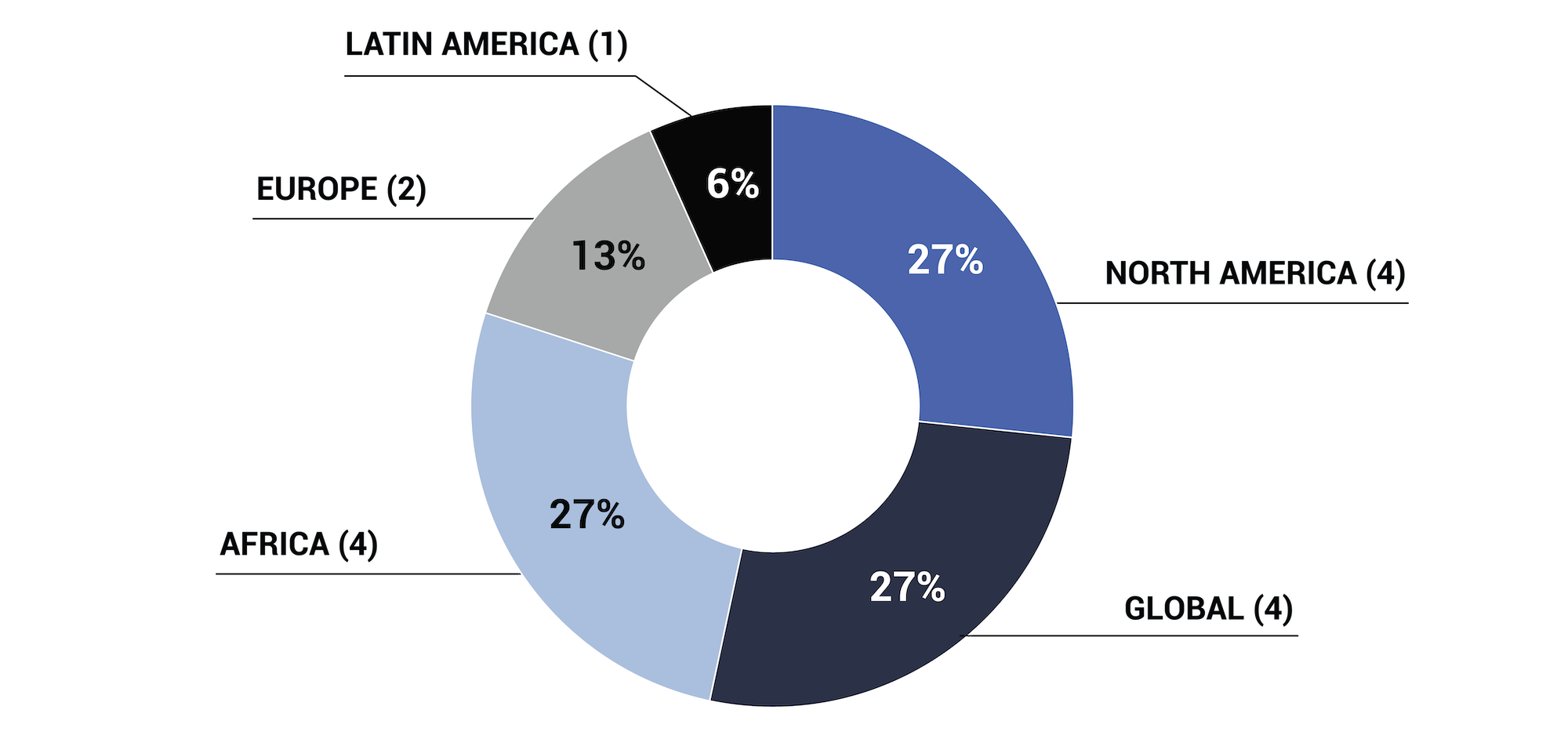

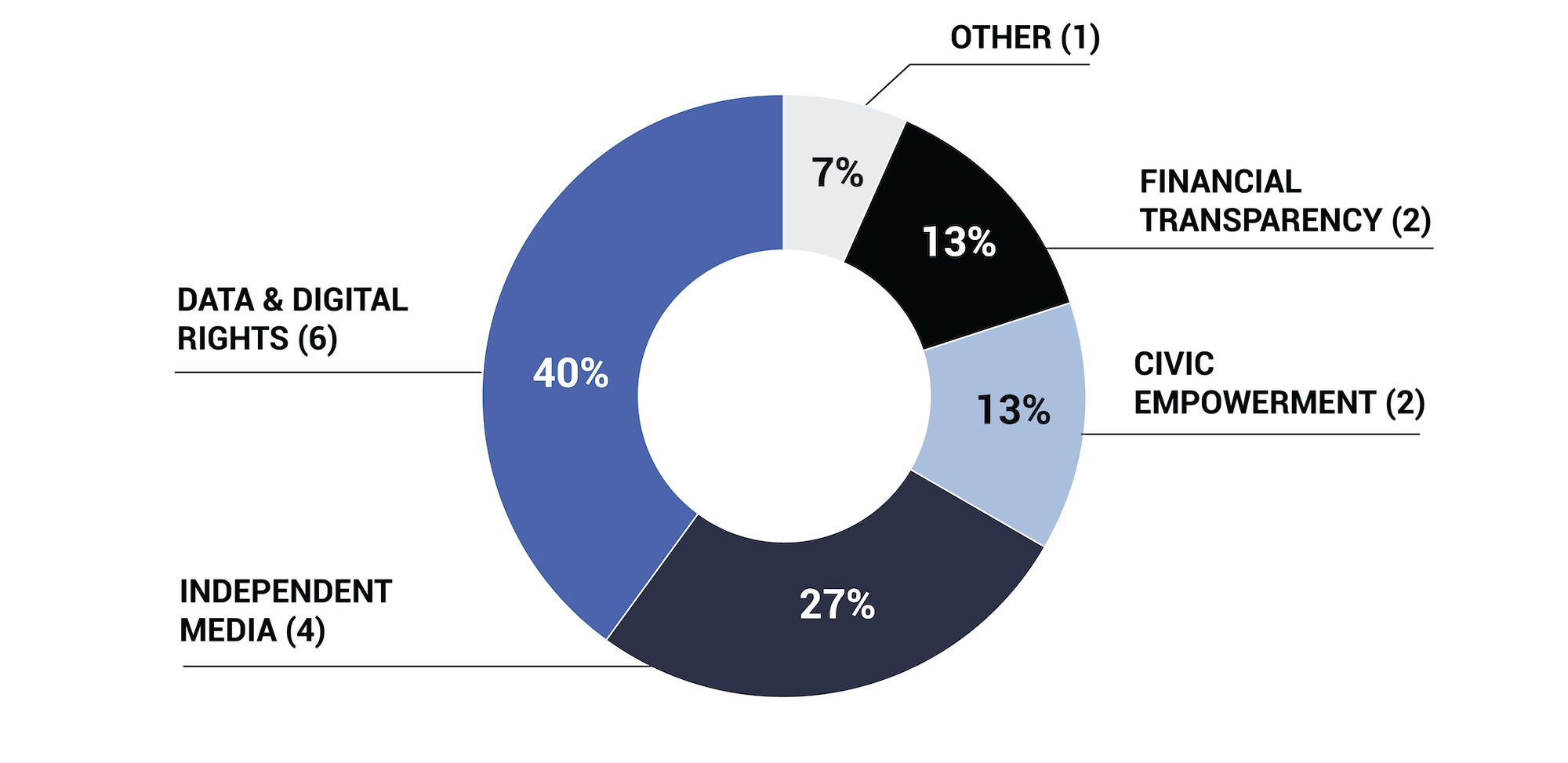

We used stratified random sampling to select participants from the list of existing grantees. We invited participation proportionally from the following groups in order to ensure representation:

Location

Non-profit / for-profit

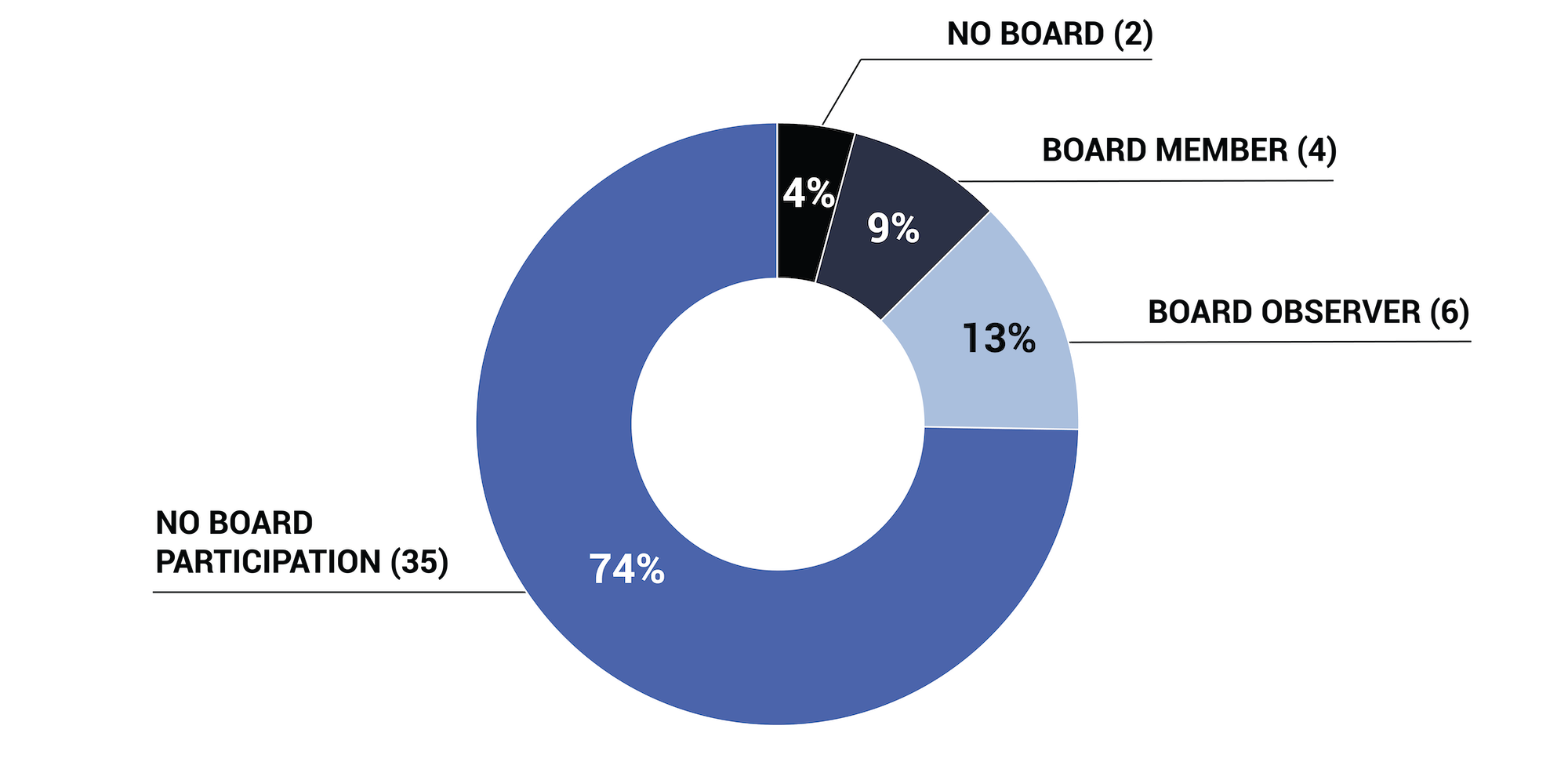

No board member from GCE / board observer from GCE / full board member from GCE.

Impact area

Funding amount

Initial funding year
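As an illustration of the stratified random sampling mentioned above, here is a minimal sketch. It is not the procedure Simply Secure actually ran: the function `stratified_sample`, the toy portfolio, the two stratification fields, and the 50% sampling fraction are all assumptions made for the example. The idea is to group the population by stratum and then draw randomly within each group in proportion to its size.

```python
import random
from collections import defaultdict


def stratified_sample(population, strata_key, fraction, seed=0):
    """Draw roughly `fraction` of each stratum, and at least one per stratum.

    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    strata = defaultdict(list)
    for item in population:
        strata[strata_key(item)].append(item)

    sample = []
    for key in sorted(strata):
        members = strata[key]
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical portfolio: 10 + 6 + 4 organizations in three strata.
grantees = [
    {"name": f"org-{i}", "location": loc, "type": typ}
    for i, (loc, typ) in enumerate(
        [("Africa", "non-profit")] * 10
        + [("Europe", "non-profit")] * 6
        + [("Europe", "for-profit")] * 4
    )
]

# Stratify on two of the dimensions listed above (location and type).
invited = stratified_sample(
    grantees, lambda g: (g["location"], g["type"]), fraction=0.5
)
```

With more stratification dimensions (board role, impact area, funding amount, funding year), individual strata become small, which is one reason a minimum of one draw per stratum is used in this sketch.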

We reached out to 35 people, of whom 20 responded (a 57% response rate). Of the 20 who responded, two were from for-profit organizations, which have been removed from the data counts.

Survey Participation Overview

The survey was designed to interrogate some of the emergent insights from the qualitative study. It focused on the following topics:

Requested needs in portfolio support*

Perceptions of GCE as a funder

Communication and transparency

Ease of reporting

The experience of non-profit grantees vs. for-profit investees

*Specifically earmarked support, above and beyond grant funding, to build organizational capacity and resilience. Examples include executive coaching, fundraising support, digital security trainings, or diversity, equity and inclusion (DEI) reviews.

We sent a survey to 122 organizations that were part of GCE’s portfolio at the time of the research.

53 out of 122 organizations responded (~43% response rate).

We asked each organization to respond only once.

14 of the 53 responses came from the 17 organizations we interviewed.

For-profits had an extremely low response rate on the survey. As a result, the survey findings cannot be used to validate differences in experience between for-profits and non-profits.
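The participation figures quoted in this report can be checked with simple arithmetic. The sketch below uses only the counts stated in the text; the function and variable names are hypothetical.

```python
def response_rate(responded, invited):
    """Return the response rate as a percentage."""
    return 100 * responded / invited


# Counts as stated in the report.
interview_rate = response_rate(20, 35)    # interview invitations
survey_rate = response_rate(53, 122)      # survey invitations
interviewed_in_survey = response_rate(14, 17)  # interviewed grantees who also responded to the survey
share_of_survey = response_rate(14, 53)   # survey responses that came from interviewed organizations

print(f"Interviews: {interview_rate:.0f}%")  # prints "Interviews: 57%"
print(f"Survey: {survey_rate:.0f}%")         # prints "Survey: 43%"
```

The last two figures show that most interviewed grantees also answered the survey, while roughly three quarters of survey responses came from organizations that were not interviewed.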

Note: Interviewee charts do not reflect interviews with three additional people of interest.